Dozens of major tech companies from countries including the US, the UK, China, and Israel are engaged in the development of artificial intelligence systems with possible military applications, with some of them creating products which can be used in lethal autonomous weapons systems, a study by Netherlands-based NGO PAX has found.

Analysing tech companies’ potential involvement in the creation of such weapons, also known as ‘killer robots’, PAX defines these systems as arms that are “able to select and attack individual targets without meaningful human control.” The advocacy group warns of the “enormous effect” these technologies could have “on the way war is conducted,” since using them amounts, in PAX’s view, to an implicit agreement to “delegat[e] the decision over life and death” to a machine.

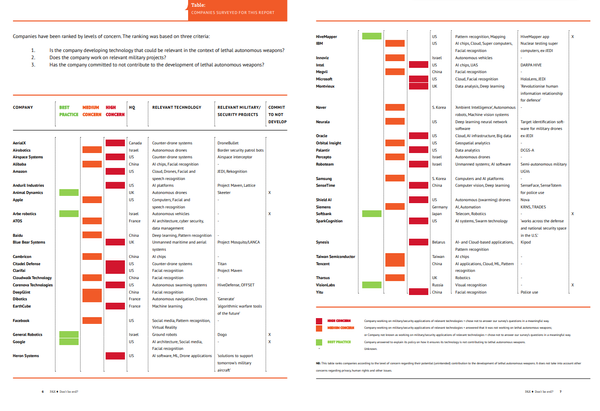

PAX surveyed 50 major tech companies from 12 countries that work on AI systems, asking them about the extent of their involvement, if any, in the creation of lethal autonomous weapons. It concluded that 21 of these companies were engaged in projects it deemed of ‘high concern’, with 22 other firms listed as ‘medium concern’ and just seven given a ‘best practice’ rating (meaning they were not engaged in the development of technology that can be used for lethal autonomous weapons).

Thirteen of the 21 ‘high concern’ companies are US-based, including Amazon, Intel, Microsoft, and Oracle. High-concern companies elsewhere include the UK’s Blue Bear Systems, which is working on the Project Mosquito unmanned combat drone; Canada’s AerialX, working on the DroneBullet project; France’s EarthCube, working on ‘algorithmic warfare tools of the future’; China’s Yitu, working on facial recognition for security use; and Israel’s Roboteam, which develops unmanned systems and AI software for semi-autonomous military vehicles.

Palantir, a US-based data analytics firm funded by the CIA, was also listed as ‘high concern’ over its work on AI for analysing intelligence collected by spy drones.

Other tech giants on the list include Alibaba, Apple, Baidu, Facebook, IBM, Samsung, Siemens, and Taiwan Semiconductor, which are also said to be working on military and security-related technologies and applications, though not directly on lethal autonomous weapons.

VisionLabs, a company engaged in visual recognition technology, was the only Russian AI tech firm to make the PAX survey’s list, and received a ‘best practice’ grade for convincingly explaining “its policy and how it ensures its technology is not contributing to lethal autonomous weapons.”

Japanese robotics company SoftBank, developer of the Pepper robot, also received a ‘best practice’ rating.

Frank Slijper, the survey’s lead author, said it was disconcerting that the major US tech giants were engaged in the development of these technologies.

“Why are companies like Microsoft and Amazon not denying that they’re currently developing these highly controversial weapons, which could decide to kill people without direct human involvement?” he was quoted by AFP as saying.

Scalable WMDs

Stuart Russell, a computer science professor at the University of California, Berkeley, warned that AI-based weapons systems could become “scalable weapons of mass destruction, because if the human is not in the loop, then a single person can launch a million weapons or a hundred million weapons.”

“Anything that’s currently a weapon, people are working on autonomous versions, whether it’s tanks, fighter aircraft, or submarines,” Russell warned. He also suggested that smaller AI-based weaponised drones, if developed, could one day be used to commit literal genocide, wiping “out one ethnic group or one gender, or using social media information [to] wipe out all people with a [certain] political view.”

PAX offered several recommendations for tech companies to prevent their products from contributing to the creation of lethal autonomous weapons, urging firms to make a public commitment not to do so, to establish “a clear policy” on the matter, and to allow open discussion of any concerns among employees.